Once you have decided what study characteristics to code, the next step, of course, is to do it—to carefully read obtained reports and to record information about the studies. The information recorded is that regarding both the study characteristics you have decided to code (see previous two sections) and the effect sizes. I defer discussion of computing effect sizes until Chapter 5, but the same principles of evaluating coding decisions of effect sizes apply as for coding study characteristics that I describe in this section.

Two important qualities of your coding system are the related concepts of transparency and replicability (Wilson, 2009). In addition to these qualities, it is also important to consider the reliability of your coding.

1. Transparency and Replicability of Coding

When writing or otherwise presenting your meta-analysis, you should provide enough details of the coding process that your audience knows exactly how you made coding decisions (transparency) and, at least in principle, could reach the same decisions as you did if they were to apply your coding strategy to studies included in your meta-analysis (replicability). To achieve these principles of transparency and replicability, it is important to describe fully how each study characteristic is quantified.

Some study characteristics are coded in a straightforward way; the characteristics that require little or no judgment decisions on the part of the coder are sometimes termed “low inference codes” (e.g., Cooper, 2009b, p. 33). For example, coding the mean age of the sample will usually involve simply recording information stated in the research reports. You should fully describe even such simple coding (e.g., that you recorded age in years). In my experience, however, even such seemingly simple study characteristics yield complexities. For example, a study might report an age range (from which you might record the midpoint) or a proxy such as grade in school (from which you might estimate a likely age). Ideally, your original coding plan will have ways of determining a reasonable value from such information, or you might have to make these decisions as the unexpected decisions arise. In either case, it is important to report these rules to ensure the transparency of your coding process.6

When study characteristics are less obvious (i.e., high inference coding, in which the coder must make judgment decisions), it is critical to fully report the coding process to ensure transparency and replicability (and this process should already be written to ensure reliability of coding; discussed next in Section 4.3.2). For example, if you have decided to code for types of measures or designs of the studies, you should report the different values for this categorical code and define each of the categories. During the planning stages, you should consider whether it is possible to reduce a high inference code into a series of more specific low inference codes.7

2. Reliability of Coding

One way to evaluate empirically the replicability of your coding system is to assess the reliability of independent efforts of coding the same studies. You can evaluate this reliability either between coders (intercoder reliability) or within the same coder (intracoder reliability; Wilson, 2009).

Intercoder reliability is assessed by having two coders from the coding team independently code a subset of overlapping studies. The coders should be unaware of which studies each other is coding because an awareness of this fact is likely to increase the vigilance of coding and therefore provide an overestimate of the actual reliability. The number of doubly coded studies should be large enough to ensure a reasonably precise estimate of reliability. Lipsey and Wilson (2001, p. 86) recommended 20 to 50 studies, and your decision to choose a number within this range might depend on your perception of the level of inference of the coding. If your protocol calls for low inference coding, then a lower number should suffice in confirming intercoder agreement, whereas higher inference coding would necessitate a higher number of overlapping studies to more precisely quantify this agreement.

Intracoder agreement is assessed by having the same person code a subset of studies twice. Because it is likely that the coder will be aware of previously coding the study, it is not possible to conceal the studies used to assess this reliability. However, if the coder is unaware during the first coding trials of which studies they will recode (e.g., a random sample of studies is selected for recoding after the initial coding is completed), the overestimation of reliability is likely reduced. One reason for assessing intracoder agreement is to evaluate potential “drift”—changes in the coding process over time that could come about from the coding experience, increasing biases from “expecting” certain results and/or fatigue. A second reason is that it is not possible to assess intercoder agreement. This inability to assess intercoder agreement is certainly a realistic possibility if you are conducting the meta-analysis alone and you have no colleagues with sufficient expertise or time to code a subset of studies. Intracoder agreement is not a perfect substitute for intercoder agreement because one coder might hold potential biases or consistently make the same coding errors during both coding sessions. However, it can serve as reasonable evidence of reliability if efforts are made to ensure the independence of the coding sessions. For example, the coder should work with unmarked copies of the studies (not with copies containing notes from the previous coding), and the coding sessions should be separated by as much time as practical.

Using either an intercoder or intracoder approach, it is useful to report the reliability of each coded study characteristic (i.e., each study characteristic, artifact correction, and effect size), just as you would report the reliability for each variable in a primary study. It is deceptive to report only a single reliability across codes, as this might obscure important differences in coding reliability across study characteristics (Yeaton & Wortman, 1993). Several indices are available for quantifying this reliability (see Orwin & Vevea, 2009), three of which are agreement rates, Cohen’s kappa, and Pearson correlation.8

2.1. Agreement Rate

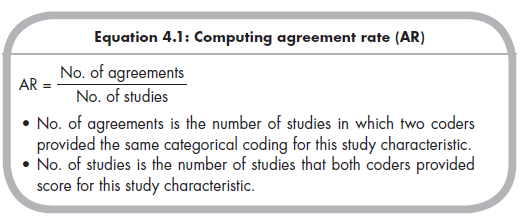

The most common index is the agreement rate (AR), which is simply the proportion of studies on which two coders (or single coder on two occasions) assign the same categorical code (Equation 4.1; from Orwin & Vevea, 2009, p. 187):

The agreement rate is simple and intuitive, and for these reasons is the most commonly reported index of coding reliability. At the same time, there are limitations to this index; namely, it does not account for base rates of coding (i.e., some values are coded more often than others) and the resulting chance levels of agreement.

2.2. Cohen’s Kappa

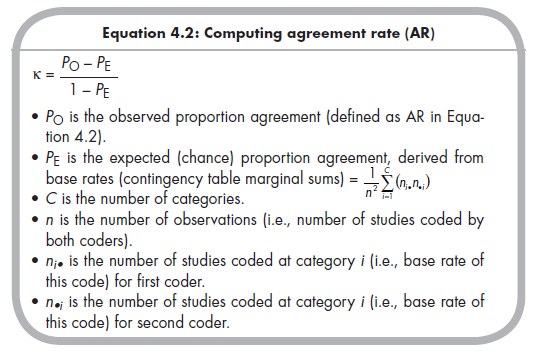

An alternative index for reliability of categorical codes is Cohen’s kappa (k), which does account for chance level agreement depending on base rates for coding. Kappa is estimated using Equation 4.2 (from Orwin & Vevea, 2009, pp. 187-188):

Cohen’s kappa is a very useful index of coding reliability for study characteristics with nominal categorical levels. When used with ordinal coding, it has the limitation of not distinguishing between “close” and “far” disagreements (e.g., a close agreement might be two coders recording 4 and 5 on a 5-point ordinal scale, whereas far disagreement might be two coders recording 1 and 5 on this scale). However, ordinal coding can be accommodated by using a weighted kappa index (see Orwin & Vevea, 2009, p. 188). The major limitation of using kappa is that it requires a fairly large number of studies to produce precise estimates of reliability. Although I cannot provide concrete guidelines as to how many studies is “enough” (because this also depends on the distributions of the base rate), I suggest that you use either the upper end of Lipsey and Wilson’s recommendations (i.e., about 40 to 50 studies) or all studies in your meta-analysis if it is important to obtain a precise estimate of coding reliability in your meta-analysis.

2.3. Pearson Correlation

When study characteristics are coded continuously or on an ordinal scale with numerous categories, a useful index of reliability is the Pearson correlation (r) between the two sets of coded values. One caveat is that the correlation coefficient does not evaluate potential mean differences between coders/ coding occasions. For example, two coders might exhibit a perfect correlation between their recorded scores of mean ages of samples, but one coded the values in years and the other in months. This discrepancy would obviously be problematic in using the coded study characteristic “mean age of sample” for either descriptive purposes or moderator analyses. Given this limitation of the correlation coefficient, I suggest also examining difference scores, or equivalently, performing a repeated-measures (a.k.a. paired samples) £-test to ensure that such discrepancies have not emerged.

Source: Card Noel A. (2015), Applied Meta-Analysis for Social Science Research, The Guilford Press; Annotated edition.

24 Aug 2021

24 Aug 2021

25 Aug 2021

24 Aug 2021

24 Aug 2021

25 Aug 2021