Publication bias refers to the possibility that studies finding null results (no statistically significant effect) or negative results (a statistically significant effect in the direction opposite to that expected) are less likely to be published than studies finding positive effects (statistically significant effects in the expected direction). This bias is likely due both to researchers being less motivated to submit null or negative results for publication and to journals (editors and reviewers) being less likely to accept manuscripts reporting these results (Cooper, DeNeve, & Charlton, 1997; Coursol & Wagner, 1986; Greenwald, 1975; Olson et al., 2002).

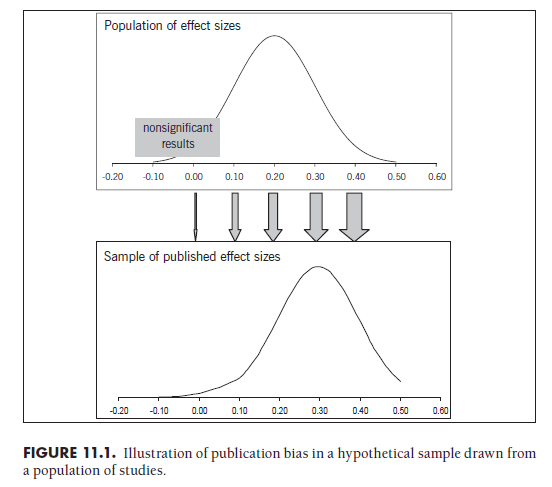

The impact of this publication bias is that the published literature might not be representative of the studies that have been conducted on a topic: the available results likely show a stronger overall effect size than would be found if all studies were considered. This impact is illustrated in Figure 11.1, which is a reproduction of Figure 3.2. The top portion of this figure shows a distribution of effect sizes from a hypothetical population of studies. These effect sizes center around a hypothetical mean effect size (about 0.20) but vary around it because of random-sampling error and, potentially, population-level between-study variance (i.e., heterogeneity; see Chapters 8 and 9). Studies that happen to find small effect sizes are less likely to yield statistically significant results (in this hypothetical figure, I have marked this region as effect sizes smaller in magnitude than 0.10, that is, between -0.10 and 0.10, although the exact range depends on the study sample sizes and the effect size considered). Below this population of effect sizes of all studies conducted, I have drawn downward arrows of different thicknesses to represent the different likelihoods of a study being published, with thicker arrows denoting a higher likelihood of publication. Consistent with the notion of publication bias, the hypothetical studies that fail to find significant effects are less likely to be published than those that do. This differential publication rate results in the distribution of published studies shown in the lower part of Figure 11.1. It can be seen that this distribution is shifted to the right, such that the mean effect size is now approximately 0.30. If the meta-analysis includes only this biased sample of published studies, then the estimate of the mean effect size is going to be considerably higher (around 0.30) than the mean in the full population of studies conducted (about 0.20). Clearly, this has serious implications for a meta-analysis that does not consider publication bias.
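The selection mechanism described above can be sketched with a small simulation. This is only an illustration under stated assumptions, not a reproduction of Figure 11.1: the function name `simulate_publication_bias`, the normal distribution of effect sizes, its standard deviation (0.10), and the two publication probabilities (0.90 and 0.20) are all hypothetical choices. Only the population mean of about 0.20 and the nonsignificance region of roughly -0.10 to 0.10 are taken from the text. With these made-up settings the upward shift will generally be smaller than the shift drawn in the figure.

```python
import random
import statistics


def simulate_publication_bias(
    n_studies=10_000,
    true_mean=0.20,         # hypothetical population mean effect size (from the text)
    sd=0.10,                # assumed spread of study effect sizes (illustrative)
    threshold=0.10,         # |effect| below this is treated as nonsignificant
    p_publish_sig=0.90,     # assumed publication probability, significant results
    p_publish_nonsig=0.20,  # assumed publication probability, nonsignificant results
    seed=1,
):
    """Return (mean of all studies, mean of published studies, number published)."""
    rng = random.Random(seed)

    # Population of all studies conducted: effect sizes scattered around the true mean.
    conducted = [rng.gauss(true_mean, sd) for _ in range(n_studies)]

    # Each study is published with a probability that depends on significance.
    published = []
    for effect in conducted:
        significant = abs(effect) >= threshold
        p_publish = p_publish_sig if significant else p_publish_nonsig
        if rng.random() < p_publish:
            published.append(effect)

    return statistics.mean(conducted), statistics.mean(published), len(published)


mean_all, mean_published, n_published = simulate_publication_bias()
print(f"Mean effect size, all studies conducted: {mean_all:.3f}")
print(f"Mean effect size, published studies:     {mean_published:.3f}")
print(f"Studies published: {n_published} of 10000")
```

Because the studies that are filtered out come mostly from the low end of the distribution, the mean of the published subset sits above the mean of all studies conducted. Lowering `p_publish_nonsig` or increasing `sd` makes the gap larger.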

This publication bias is sometimes referred to by alternative names. Some have referred to it as the “file-drawer problem” (Rosenthal, 1979), conjuring images of researchers’ file drawers containing manuscripts reporting null or negative (i.e., in the direction opposite to that expected) results that will never be seen by the meta-analyst (or anyone else in the research community). Another term proposed is “dissemination bias” (see Rothstein, Sutton, & Borenstein, 2005a). This latter term describes the broad scope of the problem more accurately, although “publication bias” is the more commonly used term (Rothstein et al., 2005a). Regardless of the terminology used, the breadth of this bias is not limited to significant results being published and nonsignificant results not being published (even in a probabilistic rather than absolute sense). One source of breadth of the bias is the existence of “gray literature,” research that lies between the file drawer and publication, such as conference presentations, technical reports, or obscure publication outlets (Conn, Valentine, Cooper, & Rantz, 2003; Hopewell, Clarke, & Mallett, 2005; also referred to as “fugitive literature” by, e.g., M. C. Rosenthal, 1994). There is evidence that null findings are more likely than positive findings to be reported only in these more obscure outlets (see Dickersin, 2005; Hopewell et al., 2005). If the literature search is less than exhaustive, these reports are less likely to be found and included in the meta-analysis than reports published in more prominent outlets.

Another source of breadth in publication bias may be the underemphasis of null or negative results. For example, researchers are likely to make significant findings the centerpiece of an empirical report and to report nonsignificant findings only in a table. Such publications, though containing the effect size of interest, might not be detected in keyword searches or in browsing the titles of published works. Similarly, null or counterintuitive findings that are published may be less likely to be cited by others; thus, backward searches are less likely to find these studies.

Finally, an additional source of breadth in considering publication bias is due to the time lag of publication. There is evidence, at least in some fields, that significant results are published more quickly than null or negative results (see Dickersin, 2005). The impact on meta-analyses, especially those focusing on topics with a more recently created empirical basis, is that the currently published results are going to overrepresent significant positive findings, whereas null or negative results are more likely to be published after the meta-analysis is performed.

Recognizing the impact and breadth of publication bias is important but does not provide guidance in managing it. Ideally, the scientific process would change so that researchers are obligated to report the results of their studies. In clinical research, the establishment of clinical trial registries (in which researchers must register a trial before beginning the study, with some journals motivating registration by considering only registered trials for publication) represents a step toward identifying studies, although there are some concerns that registries are incomplete and that the researchers of registered trials may be unwilling to share unexpected results (Berlin & Ghersi, 2005). However, unless you are in a position to mandate research and reporting practices within your field, you must deal with publication bias without being able to prevent it or even to know fully of its existence. Nevertheless, you do have several methods of evaluating the likely impact publication bias has on your meta-analytic results.

Source: Card, Noel A. (2015), Applied Meta-Analysis for Social Science Research, The Guilford Press; Annotated edition.
