Businesses have an array of technologies for protecting their information resources. They include tools for managing user identities, preventing unauthorized access to systems and data, ensuring system availability, and ensuring software quality.

1. Identity Management and Authentication

Midsize and large companies have complex IT infrastructures and many systems, each with its own set of users. Identity management software automates the process of keeping track of all these users and their system privileges, assigning each user a unique digital identity for accessing each system. It also includes tools for authenticating users, protecting user identities, and controlling access to system resources.

To gain access to a system, a user must be authorized and authenticated. Authentication refers to the ability to know that a person is who he or she claims to be. Authentication is often established by using passwords known only to authorized users. An end user uses a password to log on to a computer system and may also use passwords for accessing specific systems and files. However, users often forget passwords, share them, or choose poor passwords that are easy to guess, which compromises security. Password systems that are too rigorous hinder employee productivity. When employees must change complex passwords frequently, they often take shortcuts, such as choosing passwords that are easy to guess or keeping their passwords at their workstations in plain view. Passwords can also be sniffed if transmitted over a network or stolen through social engineering.

New authentication technologies, such as tokens, smart cards, and biometric authentication, overcome some of these problems. A token is a physical device, similar to an identification card, that is designed to prove the identity of a single user. Tokens are small gadgets that typically fit on key rings and display passcodes that change frequently. A smart card is a device about the size of a credit card that contains a chip formatted with access permission and other data. (Smart cards are also used in electronic payment systems.) A reader device interprets the data on the smart card and allows or denies access.

Biometric authentication uses systems that read and interpret individual human traits, such as fingerprints, irises, and voices, to grant or deny access. Biometric authentication is based on the measurement of a physical or behavioral trait that makes each individual unique. It compares a person’s unique characteristics, such as fingerprints, face, voice, or retinal image, against a stored profile of these characteristics. If the two profiles match, access is granted. Fingerprint and facial recognition technologies are just beginning to be used for security applications, with many PC laptops (and some smartphones) equipped with fingerprint identification devices and some models with built-in webcams and face recognition software. Financial service firms such as Vanguard and Fidelity have implemented voice authentication systems for their clients.

The steady stream of incidents in which hackers have been able to access traditional passwords highlights the need for more secure means of authentication. Two-factor authentication increases security by validating users through a multistep process. To be authenticated, a user must provide two means of identification, one of which is typically a physical token, such as a smart card or chip-enabled bank card, and the other of which is typically data, such as a password or personal identification number (PIN). Biometric data, such as fingerprints, iris prints, or voice prints, can also be used as one of the authenticating mechanisms. A common example of two-factor authentication is a bank card; the card itself is the physical item, and the PIN is the other piece of data that goes with it.
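The two-factor idea can be sketched in a few lines of Python. This is a minimal illustration, not a production design: it assumes the token generates time-based passcodes in the style of RFC 6238 (the text does not say which scheme a given token uses), and the function names `hash_password`, `totp`, and `authenticate` are hypothetical.

```python
import hashlib
import hmac
import struct


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted, deliberately slow hash so the plain password is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Passcode that changes every `step` seconds (RFC 6238 style, HMAC-SHA1)."""
    counter = struct.pack(">Q", unix_time // step)
    digest = hmac.new(secret, counter, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def authenticate(password, passcode, stored_hash, salt, token_secret, unix_time):
    """Grant access only if BOTH factors check out."""
    knows_password = hmac.compare_digest(hash_password(password, salt), stored_hash)
    has_token = hmac.compare_digest(passcode, totp(token_secret, unix_time))
    return knows_password and has_token
```

A stolen password alone fails the second check, and a stolen token alone fails the first, which is the point of requiring two independent factors.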

2. Firewalls, Intrusion Detection Systems, and Anti-malware Software

Without protection against malware and intruders, connecting to the Internet would be very dangerous. Firewalls, intrusion detection systems, and anti-malware software have become essential business tools.

2.1. Firewalls

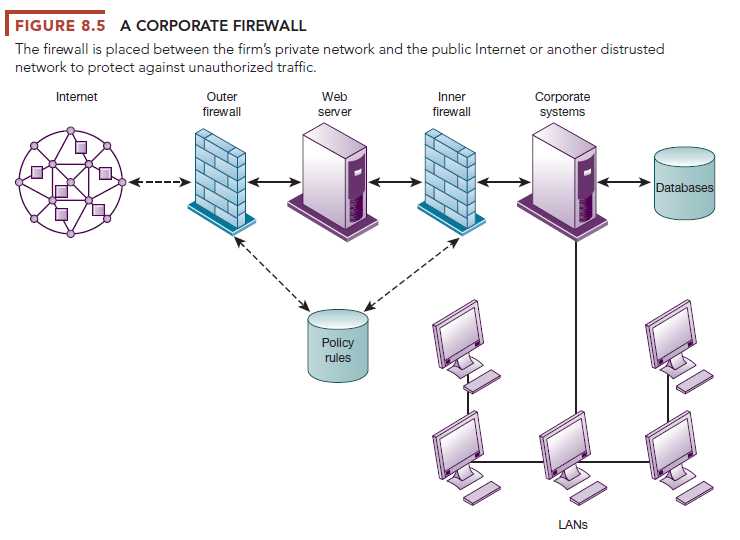

Firewalls prevent unauthorized users from accessing private networks. A firewall is a combination of hardware and software that controls the flow of incoming and outgoing network traffic. It is generally placed between the organization’s private internal networks and distrusted external networks, such as the Internet, although firewalls can also be used to protect one part of a company’s network from the rest of the network (see Figure 8.5).

The firewall acts like a gatekeeper that examines each user’s credentials before it grants access to a network. The firewall identifies names, IP addresses, applications, and other characteristics of incoming traffic. It checks this information against the access rules that the network administrator has programmed into the system. The firewall prevents unauthorized communication into and out of the network.

In large organizations, the firewall often resides on a specially designated computer separate from the rest of the network, so no incoming request directly accesses private network resources. There are a number of firewall screening technologies, including static packet filtering, stateful inspection, Network Address Translation, and application proxy filtering. They are frequently used in combination to provide firewall protection.

Packet filtering examines selected fields in the headers of data packets flowing back and forth between the trusted network and the Internet, examining individual packets in isolation. This filtering technology can miss many types of attacks.
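As a rough sketch of static packet filtering, the Python below checks a few header fields of each packet against an ordered rule list and denies anything that matches no rule. The `Packet` and `Rule` types, the rule set, and the addresses (taken from documentation-reserved ranges) are all assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int
    protocol: str


@dataclass(frozen=True)
class Rule:
    action: str               # "allow" or "deny"
    src_net: str              # source network in CIDR notation
    dst_port: Optional[int]   # None matches any port
    protocol: str


# Hypothetical rule set; the first matching rule wins.
RULES = [
    Rule("deny", "203.0.113.0/24", None, "tcp"),   # block a known-bad network
    Rule("allow", "0.0.0.0/0", 443, "tcp"),        # allow HTTPS from anywhere else
]


def filter_packet(packet: Packet, rules=RULES) -> str:
    """Decide on one packet in isolation, using header fields only."""
    for rule in rules:
        in_net = ipaddress.ip_address(packet.src_ip) in ipaddress.ip_network(rule.src_net)
        port_ok = rule.dst_port is None or rule.dst_port == packet.dst_port
        if in_net and port_ok and rule.protocol == packet.protocol:
            return rule.action
    return "deny"  # default deny when no rule matches
```

Because each decision looks at a single packet, the filter has no memory of earlier traffic, which is why it can miss attacks that only show up across several packets.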

Stateful inspection provides additional security by determining whether packets are part of an ongoing dialogue between a sender and a receiver. It sets up state tables to track information over multiple packets. Packets are accepted or rejected based on whether they are part of an approved conversation or attempting to establish a legitimate connection.
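A state table can be sketched as a set of conversations the firewall has already seen leave the network. The class below is a simplified assumption of how such tracking might work: it ignores connection teardown, timeouts, and protocol details, and the class and method names are hypothetical.

```python
class StatefulFirewall:
    """Tracks conversations started from inside and admits only their replies."""

    def __init__(self):
        # Each entry: (inside_ip, inside_port, outside_ip, outside_port)
        self.state_table = set()

    def outbound(self, src_ip, src_port, dst_ip, dst_port) -> str:
        """An inside host opens a conversation; remember it."""
        self.state_table.add((src_ip, src_port, dst_ip, dst_port))
        return "allow"

    def inbound(self, src_ip, src_port, dst_ip, dst_port) -> str:
        """Accept an incoming packet only if it mirrors a recorded conversation."""
        if (dst_ip, dst_port, src_ip, src_port) in self.state_table:
            return "allow"
        return "deny"
```

An unsolicited inbound packet is rejected even if its header fields look harmless, because no matching entry exists in the table.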

Network Address Translation (NAT) can provide another layer of protection when static packet filtering and stateful inspection are employed. NAT conceals the IP addresses of the organization’s internal host computer(s) to prevent sniffer programs outside the firewall from ascertaining them and using that information to penetrate internal systems.
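The concealment NAT provides can be illustrated with a small translation table. This sketch assumes port-based translation behind a single public address; the `Nat` class, its method names, and the starting port number are hypothetical.

```python
class Nat:
    """Maps private (ip, port) pairs to ports on one shared public address."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.out_map = {}   # (private_ip, private_port) -> public_port
        self.in_map = {}    # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip: str, private_port: int):
        """Rewrite the source so outsiders see only the public address."""
        key = (private_ip, private_port)
        if key not in self.out_map:
            self.out_map[key] = self.next_port
            self.in_map[self.next_port] = key
            self.next_port += 1
        return (self.public_ip, self.out_map[key])

    def translate_inbound(self, public_port: int):
        """Find the internal host for a reply, or None if no mapping exists."""
        return self.in_map.get(public_port)
```

An outside observer sees only the public address and port, never the internal host's own address.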

Application proxy filtering examines the application content of packets. A proxy server stops data packets originating outside the organization, inspects them, and passes a proxy to the other side of the firewall. If a user outside the company wants to communicate with a user inside the organization, the outside user first communicates with the proxy application, and the proxy application communicates with the firm’s internal computer. Likewise, a computer user inside the organization goes through the proxy to talk with computers on the outside.

To create a good firewall, an administrator must maintain detailed internal rules identifying the people, applications, or addresses that are allowed or rejected. Firewalls can deter, but not completely prevent, network penetration by outsiders and should be viewed as one element in an overall security plan.

2.2. Intrusion Detection Systems

In addition to firewalls, commercial security vendors now provide intrusion detection tools and services to protect against suspicious network traffic and attempts to access files and databases. Intrusion detection systems feature full-time monitoring tools placed at the most vulnerable points or hot spots of corporate networks to detect and deter intruders continually. The system generates an alarm if it finds a suspicious or anomalous event. Scanning software looks for patterns indicative of known methods of computer attacks such as bad passwords, checks to see whether important files have been removed or modified, and sends warnings of vandalism or system administration errors. The intrusion detection tool can also be customized to shut down a particularly sensitive part of a network if it receives unauthorized traffic.
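Two of the checks described above, repeated bad passwords and removed or modified files, can be sketched as follows. The event format, the threshold of three failures, and the function names are assumptions for illustration; real intrusion detection products use far richer signals.

```python
import hashlib
from collections import Counter


def failed_login_alerts(events, threshold: int = 3):
    """events: iterable of (source_ip, succeeded). Flag sources with many failures."""
    failures = Counter(ip for ip, succeeded in events if not succeeded)
    return sorted(ip for ip, count in failures.items() if count >= threshold)


def fingerprint(data: bytes) -> str:
    """Content hash recorded while the file is known to be good."""
    return hashlib.sha256(data).hexdigest()


def changed_files(baseline, current):
    """baseline: name -> recorded fingerprint; current: name -> file bytes now.

    Returns the names of files that were removed or modified.
    """
    return sorted(
        name for name, digest in baseline.items()
        if name not in current or fingerprint(current[name]) != digest
    )
```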

2.3. Anti-malware Software

Defensive technology plans for both individuals and businesses must include anti-malware protection for every computer. Anti-malware software prevents, detects, and removes malware, including computer viruses, computer worms, Trojan horses, spyware, and adware. However, most anti-malware software is effective only against malware already known when the software was written. To remain effective, the software must be continually updated. Even then it is not always effective because some malware can evade detection. Organizations need to use additional malware detection tools for better protection.
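The limitation described above, that detection works only for malware already known, is easy to see in a signature-based scanner. The sketch below assumes signatures are plain SHA-256 hashes of whole files, which is a simplification; the function names and sample data are hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Fingerprint of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def scan(files, known_signatures):
    """files: name -> bytes. Return names whose fingerprint is a known signature."""
    return sorted(
        name for name, data in files.items()
        if signature(data) in known_signatures
    )


# Hypothetical signature database containing one known sample.
KNOWN = {signature(b"EVIL-PAYLOAD-v1")}

SAMPLE_FILES = {
    "a.exe": b"EVIL-PAYLOAD-v1",   # known sample: detected
    "b.exe": b"EVIL-PAYLOAD-v2",   # slightly altered variant: missed
    "notes.txt": b"hello",
}
```

Scanning `SAMPLE_FILES` flags only `a.exe`. The altered variant goes undetected until its signature is added, which is why the signature database must be updated continually.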

2.4. Unified Threat Management Systems

To help businesses reduce costs and improve manageability, security vendors have combined various security tools into a single appliance, including firewalls, virtual private networks, intrusion detection systems, and web content filtering and anti-spam software. These comprehensive security management products are called unified threat management (UTM) systems. UTM products are available for all sizes of networks. Leading UTM vendors include Fortinet, Sophos, and Check Point, and networking vendors such as Cisco Systems and Juniper Networks provide some UTM capabilities in their products.

3. Securing Wireless Networks

The initial security standard developed for Wi-Fi, called Wired Equivalent Privacy (WEP), is not very effective because its encryption keys are relatively easy to crack. WEP provides some margin of security, however, if users remember to enable it. Corporations can further improve Wi-Fi security by using it in conjunction with virtual private network (VPN) technology when accessing internal corporate data.

In June 2004, the IEEE ratified the 802.11i specification, which the Wi-Fi Alliance industry trade group certifies as Wi-Fi Protected Access 2 (WPA2) and which replaces WEP with stronger security standards. Instead of the static encryption keys used in WEP, the new standard uses much longer keys that continually change, making them harder to crack. The most recent specification is WPA3, introduced in 2018.

4. Encryption and Public Key Infrastructure

Many businesses use encryption to protect digital information that they store, physically transfer, or send over the Internet. Encryption is the process of transforming plain text or data into cipher text that cannot be read by anyone other than the sender and the intended receiver. Data are encrypted by using a secret numerical code, called an encryption key, that transforms plain data into cipher text. The message must be decrypted by the receiver.

Two methods for encrypting network traffic on the web are SSL and S-HTTP. Secure Sockets Layer (SSL) and its successor, Transport Layer Security (TLS), enable client and server computers to manage encryption and decryption activities as they communicate with each other during a secure web session. Secure Hypertext Transfer Protocol (S-HTTP) is another protocol used for encrypting data flowing over the Internet, but it is limited to individual messages, whereas SSL and TLS are designed to establish a secure connection between two computers.

The capability to generate secure sessions is built into Internet client browser software and servers. The client and the server negotiate what key and what level of security to use. Once a secure session is established between the client and the server, all messages in that session are encrypted.

Two methods of encryption are symmetric key encryption and public key encryption. In symmetric key encryption, the sender and receiver establish a secure Internet session by creating a single encryption key and sending it to the receiver so both the sender and receiver share the same key. The strength of the encryption key is measured by its bit length. Today, a typical key will be 56 to 256 bits long (a string of 56 to 256 binary digits) depending on the level of security desired. The longer the key, the more difficult it is to break the key. The downside is that the longer the key, the more computing power it takes for legitimate users to process the information.
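The effect of key length can be made concrete with a little arithmetic: a key of n bits has 2^n possible values, so each extra bit doubles the work of an exhaustive search. The guessing rate used in the usage note is an arbitrary assumption, not a measured figure.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def keyspace(bits: int) -> int:
    """Number of distinct keys of the given bit length."""
    return 2 ** bits


def years_to_search(bits: int, guesses_per_second: float) -> float:
    """Time to try every key at a fixed (assumed) guessing rate."""
    return keyspace(bits) / guesses_per_second / SECONDS_PER_YEAR
```

For example, `keyspace(56)` is 72,057,594,037,927,936 keys, while `keyspace(256)` is a 78-digit number. At any assumed rate, such as `years_to_search(56, 1e12)` versus `years_to_search(57, 1e12)`, adding one bit doubles the search time.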

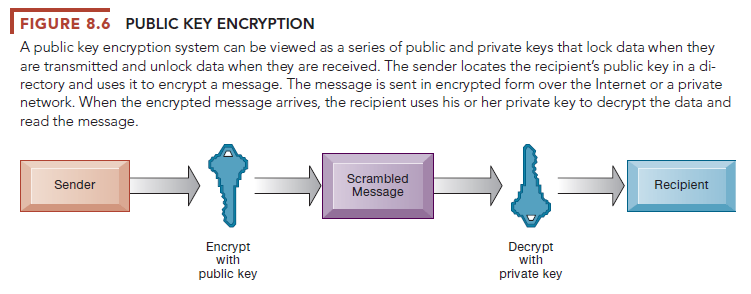

The problem with all symmetric encryption schemes is that the key itself must be shared somehow among the senders and receivers, which exposes the key to outsiders who might just be able to intercept and decrypt the key. A more secure form of encryption called public key encryption uses two keys: one shared (or public) and one totally private as shown in Figure 8.6. The keys are mathematically related so that data encrypted with one key can be decrypted using only the other key. To send and receive messages, communicators first create separate pairs of private and public keys. The public key is kept in a directory, and the private key must be kept secret. The sender encrypts a message with the recipient’s public key. On receiving the message, the recipient uses his or her private key to decrypt it.
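The relationship between the two keys can be shown with a toy RSA example. RSA is one public key scheme chosen here for illustration; the text does not name a specific algorithm. The primes below are deliberately tiny, so this is for understanding only and offers no real security. It requires Python 3.8 or later for the modular inverse via `pow`.

```python
# Toy RSA key generation with textbook-sized primes (insecure by design).
p, q = 61, 53
n = p * q                      # 3233, part of both keys
phi = (p - 1) * (q - 1)        # 3120
e = 17                         # public exponent
d = pow(e, -1, phi)            # private exponent: 2753

PUBLIC_KEY = (e, n)            # published in a directory
PRIVATE_KEY = (d, n)           # kept secret by the recipient


def encrypt(message: int, public_key) -> int:
    """Sender encrypts with the RECIPIENT's public key."""
    exponent, modulus = public_key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, private_key) -> int:
    """Only the matching private key recovers the message."""
    exponent, modulus = private_key
    return pow(ciphertext, exponent, modulus)
```

Encrypting the number 65 with `PUBLIC_KEY` gives 2790, and decrypting 2790 with `PRIVATE_KEY` gives back 65. No secret key ever has to travel between the parties.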

Digital certificates are data files used to establish the identity of users and electronic assets for protection of online transactions (see Figure 8.7). A digital certificate system uses a trusted third party, known as a certificate authority (CA), to validate a user’s identity. There are many CAs in the United States and around the world, including Symantec, GoDaddy, and Comodo.

The CA verifies a digital certificate user’s identity offline. This information is put into a CA server, which generates an encrypted digital certificate containing owner identification information and a copy of the owner’s public key. The certificate authenticates that the public key belongs to the designated owner.

The CA makes its own public key available either in print or perhaps on the Internet. The recipient of an encrypted message uses the CA’s public key to decode the digital certificate attached to the message, verifies it was issued by the CA, and then obtains the sender’s public key and identification information contained in the certificate. By using this information, the recipient can send an encrypted reply. The digital certificate system would enable, for example, a credit card user and a merchant to validate that their digital certificates were issued by an authorized and trusted third party before they exchange data. Public key infrastructure (PKI), the use of public key cryptography working with a CA, is now widely used in e-commerce.
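The certificate check can be sketched as a digital signature: the CA signs the owner's identity and public key with its private key, and anyone holding the CA's public key can verify the result. This models the "decode with the CA's public key" step as signature verification, which is an interpretation of the text. The key sizes, certificate layout, and function names are toy assumptions, and Python 3.8 or later is assumed for the modular inverse.

```python
import hashlib

# Toy CA key pair built from two known Mersenne primes; far too small for real use.
P, Q = 2 ** 61 - 1, 2 ** 31 - 1
CA_N = P * Q
CA_E = 65537                                   # CA public exponent
CA_D = pow(CA_E, -1, (P - 1) * (Q - 1))        # CA private exponent


def _digest(owner: str, owner_public_key: str) -> int:
    """Hash the certificate contents down to a number below the modulus."""
    data = f"{owner}|{owner_public_key}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % CA_N


def issue_certificate(owner: str, owner_public_key: str) -> dict:
    """CA side: sign the identity/public-key pair with the CA's private key."""
    signature = pow(_digest(owner, owner_public_key), CA_D, CA_N)
    return {"owner": owner, "public_key": owner_public_key, "signature": signature}


def verify_certificate(cert: dict) -> bool:
    """Recipient side: check the signature using only the CA's public key."""
    expected = _digest(cert["owner"], cert["public_key"])
    return pow(cert["signature"], CA_E, CA_N) == expected
```

If an attacker swaps in a different public key, the signature no longer matches the contents and verification fails.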

5. Securing Transactions with Blockchain

Blockchain, which we introduced in Chapter 6, is gaining attention as an alternative approach for securing transactions and establishing trust among multiple parties. A blockchain is a chain of digital “blocks” that contain records of transactions. Each block is connected to all the blocks before and after it, and the blockchains are continually updated and kept in sync. This makes it difficult to tamper with a single record because one would have to change the block containing that record as well as those linked to it to avoid detection.

Once recorded, a blockchain transaction cannot be changed. The records in a blockchain are secured through cryptography, and all transactions are encrypted. Blockchain network participants have their own private keys that are assigned to the transactions they create and act as a personal digital signature. If a record is altered, the signature will become invalid, and the blockchain network will know immediately that something is amiss. Because blockchains aren’t contained in a central location, they don’t have a single point of failure and cannot be changed from a single computer. Blockchain is especially suitable for environments with high security requirements and mutually unknown actors.
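The tamper-evidence property comes from linking each block to the hash of the one before it. The sketch below shows only that linking. It leaves out per-transaction signatures, distribution across many computers, and consensus, and the block layout and function names are assumptions.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, transactions, previous_hash) -> str:
    """Hash of a block's contents, including the link to its predecessor."""
    payload = json.dumps([index, transactions, previous_hash], sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def add_block(chain, transactions) -> None:
    """Append a block that points at the hash of the current last block."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "transactions": transactions,
        "previous_hash": previous_hash,
        "hash": block_hash(index, transactions, previous_hash),
    })


def is_valid(chain) -> bool:
    """Recompute every hash and check every link."""
    for i, block in enumerate(chain):
        expected_previous = chain[i - 1]["hash"] if i else GENESIS_HASH
        if block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["transactions"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
    return True
```

Changing a transaction in an earlier block changes that block's hash, so either its stored hash or the next block's link stops matching and `is_valid` returns `False`.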

6. Ensuring System Availability

As companies increasingly rely on digital networks for revenue and operations, they need to take additional steps to ensure that their systems and applications are always available. Firms with critical applications requiring online transaction processing, such as those in the airline and financial services industries, have used fault-tolerant computer systems for many years to ensure 100 percent availability. In online transaction processing, transactions entered online are immediately processed by the computer. Numerous changes to databases, reporting, and requests for information occur each instant.

Fault-tolerant computer systems contain redundant hardware, software, and power supply components that create an environment that provides continuous, uninterrupted service. Fault-tolerant computers use special software routines or self-checking logic built into their circuitry to detect hardware failures and automatically switch to a backup device. Parts from these computers can be removed and repaired without disruption to the computer or downtime. Downtime refers to periods of time in which a system is not operational.
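A short calculation shows why redundancy matters for availability. The sketch assumes component failures are independent, which real systems only approximate, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours of downtime per year at a given availability (0 to 1)."""
    return (1 - availability) * HOURS_PER_YEAR


def redundant_availability(single: float, copies: int) -> float:
    """Availability when service survives as long as ANY one copy is up.

    Assumes the copies fail independently of one another.
    """
    return 1 - (1 - single) ** copies
```

A system that is 99.9 percent available is still down about 8.76 hours a year. Under the independence assumption, pairing two components that are each 99 percent available raises the combined figure to 99.99 percent.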

6.1. Controlling Network Traffic: Deep Packet Inspection

Have you ever tried to use your campus network and found that it was very slow? It may be because your fellow students are using the network to download music or watch YouTube. Bandwidth-consuming applications such as file-sharing programs, Internet phone service, and online video can clog and slow down corporate networks, degrading performance. A technology called deep packet inspection (DPI) helps solve this problem. DPI examines data files and sorts out low-priority online material while assigning higher priority to business-critical files. Based on the priorities established by a network’s operators, it decides whether a specific data packet can continue to its destination or should be blocked or delayed while more important traffic proceeds.
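The prioritizing step can be sketched with a priority queue. The example assumes the inspection stage has already labeled each packet with an application type, and the priority table is a hypothetical policy that a network operator would set.

```python
import heapq
from itertools import count

# Hypothetical operator policy: lower number = sent sooner, None = blocked.
PRIORITY = {
    "erp": 0,
    "email": 1,
    "web": 2,
    "video": 3,
    "file-sharing": None,
}
DEFAULT_PRIORITY = 2


def schedule(packets):
    """packets: iterable of (application, payload). Return payloads in send order."""
    queue = []
    arrival = count()  # tie-breaker keeps arrival order within one priority level
    for application, payload in packets:
        priority = PRIORITY.get(application, DEFAULT_PRIORITY)
        if priority is None:
            continue  # blocked by policy
        heapq.heappush(queue, (priority, next(arrival), payload))
    return [heapq.heappop(queue)[2] for _ in range(len(queue))]
```

Given traffic arriving as video, ERP, file-sharing, web, ERP, the ERP packets go out first, the video packet goes last, and the file-sharing packet is dropped.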

6.2. Security Outsourcing

Many companies, especially small businesses, lack the resources or expertise to provide a secure high-availability computing environment on their own. They can outsource many security functions to managed security service providers (MSSPs) that monitor network activity and perform vulnerability testing and intrusion detection. SecureWorks, AT&T, Verizon, IBM, Perimeter eSecurity, and Symantec are leading providers of MSSP services.

7. Security Issues for Cloud Computing and the Mobile Digital Platform

Although cloud computing and the emerging mobile digital platform have the potential to deliver powerful benefits, they pose new challenges to system security and reliability. We now describe some of these challenges and how they should be addressed.

7.1. Security in the Cloud

When processing takes place in the cloud, accountability and responsibility for protection of sensitive data still reside with the company owning that data. Understanding how the cloud computing provider organizes its services and manages the data is critical (see the Interactive Session on Management).

Cloud computing is highly distributed. Cloud applications reside in large remote data centers and server farms that supply business services and data management for multiple corporate clients. To save money and keep costs low, cloud computing providers often distribute work to data centers around the globe where work can be accomplished most efficiently. When you use the cloud, you may not know precisely where your data are being hosted.

Virtually all cloud providers use encryption to secure the data they handle while the data are being transmitted. However, if the data are stored on devices that also store other companies’ data, it’s important to ensure that these stored data are encrypted as well. DDoS attacks are especially harmful because they render cloud services unavailable to legitimate customers.

Companies expect their systems to be running 24/7. Cloud providers still experience occasional outages, but their reliability has increased to the point where a number of large companies are using cloud services for part of their IT infrastructures. Most keep their critical systems in-house or in private clouds.

Cloud users need to confirm that regardless of where their data are stored, they are protected at a level that meets their corporate requirements. They should stipulate that the cloud provider store and process data in specific jurisdictions according to the privacy rules of those jurisdictions. Cloud clients should find how the cloud provider segregates their corporate data from those of other companies and ask for proof that encryption mechanisms are sound. It’s also important to know how the cloud provider will respond if a disaster strikes, whether the provider will be able to restore your data completely, and how long this should take. Cloud users should also ask whether cloud providers will submit to external audits and security certifications. These kinds of controls can be written into the service level agreement (SLA) before signing with a cloud provider. The Cloud Security Alliance (CSA) has created industrywide standards for cloud security, specifying best practices to secure cloud computing.

7.2. Securing Mobile Platforms

If mobile devices are performing many of the functions of computers, they need to be secured like desktops and laptops against malware, theft, accidental loss, unauthorized access, and hacking attempts. Mobile devices accessing corporate systems and data require special protection. Companies should make sure that their corporate security policy includes mobile devices, with additional details on how mobile devices should be supported, protected, and used. They will need mobile device management tools to authorize all devices in use; to maintain accurate inventory records on all mobile devices, users, and applications; to control updates to applications; and to lock down or erase lost or stolen devices so they can’t be compromised. Data loss prevention technology can identify where critical data are saved, who is accessing the data, how data are leaving the company, and where the data are going. Firms should develop guidelines stipulating approved mobile platforms and software applications as well as the required software and procedures for remote access of corporate systems. The organization’s mobile security policy should forbid employees from using unsecured, consumer-based applications for transferring and storing corporate documents and files or sending such documents and files to oneself by email without encryption. Companies should encrypt communication whenever possible. All mobile device users should be required to use the password feature found in every smartphone.

8. Ensuring Software Quality

In addition to implementing effective security and controls, organizations can improve system quality and reliability by employing software metrics and rigorous software testing. Software metrics are objective assessments of the system in the form of quantified measurements. Ongoing use of metrics allows the information systems department and end users to measure the performance of the system jointly and identify problems as they occur. Examples of software metrics include the number of transactions that can be processed in a specified unit of time, online response time, the number of payroll checks printed per hour, and the number of known bugs per hundred lines of program code. For metrics to be successful, they must be carefully designed, formal, objective, and used consistently.
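Two of the metrics named above reduce to simple ratios. The function names and sample figures are illustrative assumptions, not measurements from any real system.

```python
def throughput(transactions: int, seconds: float) -> float:
    """Transactions processed per second over a measured interval."""
    return transactions / seconds


def defects_per_hundred_lines(known_bugs: int, lines_of_code: int) -> float:
    """Known bugs normalized to 100 lines of program code."""
    return known_bugs * 100 / lines_of_code
```

For instance, 1,200 transactions in 60 seconds is a throughput of 20 per second, and 12 known bugs in 4,000 lines of code works out to 0.3 bugs per hundred lines. Tracking the same ratios release after release is what makes them useful.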

Early, regular, and thorough testing will contribute significantly to system quality. Many view testing as a way to prove the correctness of work they have done. In fact, we know that all sizable software is riddled with errors, and we must test to uncover these errors.

Good testing begins before a software program is even written, by using a walkthrough—a review of a specification or design document by a small group of people carefully selected based on the skills needed for the particular objectives being tested. When developers start writing software programs, coding walkthroughs can also be used to review program code. However, code must be tested by computer runs. When errors are discovered, the source is found and eliminated through a process called debugging. You can find out more about the various stages of testing required to put an information system into operation in Chapter 12. Our Learning Tracks also contain descriptions of methodologies for developing software programs that contribute to software quality.

Source: Laudon Kenneth C., Laudon Jane Price (2020), Management Information Systems: Managing the Digital Firm, Pearson; 16th edition.
