Notwithstanding the considerable flexibility of the regression framework and the SEM approach to moderator analysis in meta-analysis, you should consider three potential limitations when drawing conclusions from moderator analyses.

1. Empirically Confounded Moderators

Just as you want to avoid highly correlated predictors in a multiple regression analysis of primary data, it is important to ensure that the moderator variables (i.e., predictors) in a meta-analysis are not too highly correlated. If they are, two problems can emerge. First, it might be difficult to detect the unique association of a moderator above and beyond the other, highly correlated moderators. Second, if they are extremely highly correlated, you can obtain unstable regression estimates with large standard errors, which may change substantially with small changes in the set of studies (the so-called bouncing beta problem).
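The size of the standard-error problem can be made concrete with a standard identity for weighted least squares. Assuming a meta-regression with an intercept, weights $w_i$ (e.g., inverse variances) treated as known, and residual variance fixed at 1 as in a fixed-effect model, the sampling variance of the coefficient $b_j$ for moderator $x_j$ is:

$$
\operatorname{Var}(b_j) \;=\; \frac{1}{\sum_i w_i \left(x_{ij} - \bar{x}_j^{(w)}\right)^2} \cdot \frac{1}{1 - R_j^2}
$$

Here $\bar{x}_j^{(w)}$ is the weighted mean of $x_j$ and $R_j^2$ is the weighted $R^2$ from regressing $x_j$ on the other moderators. The second factor, $1/(1 - R_j^2)$, is the variance inflation factor (VIF). At $R_j^2 = .90$ the VIF is 10, so, holding the weighted spread of $x_j$ fixed, the standard error of $b_j$ is about $\sqrt{10} \approx 3.16$ times what it would be if $x_j$ were uncorrelated with the other moderators.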

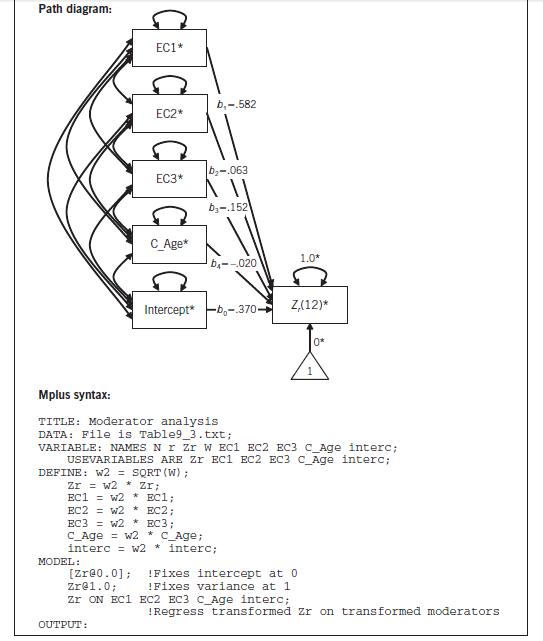

Fortunately, it is easy, though somewhat time-consuming, to evaluate multicollinearity in meta-analytic moderator analyses. To do so, you regress each moderator (predictor) onto the set of all other moderators, using the same weights (i.e., the inverse variances of the effect size estimates) that you used in the moderator analyses. To illustrate using the example data shown in Table 9.3, I would regress age onto the three dummy variables representing the four categorical methods of assessing aggression. Here, R² = .41, far less than the value of .90 that is often considered too high (e.g., Cohen et al., 2003, p. 424). I would then repeat this process for each of the other moderator variables, regressing each one (weighted by w) on all the remaining moderators.
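The procedure just described can be sketched in Python with NumPy. This is a minimal sketch, assuming the moderators are stored as numeric columns (categorical moderators already dummy-coded) and that `w` holds the same weights used in the moderator analysis. The data below are made up for illustration and are not the Table 9.3 data; `weighted_r2` and `collinearity_table` are hypothetical helper names, not functions from any library.

```python
import numpy as np


def weighted_r2(y, X, w):
    """Weighted R^2 from regressing y on X (plus an intercept) with weights w."""
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    sw = np.sqrt(w)
    # Weighted least squares: scale rows by sqrt(w), then solve ordinary least squares
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    resid = y - X @ beta
    ybar = np.sum(w * y) / np.sum(w)  # weighted mean of y
    ss_res = np.sum(w * resid ** 2)
    ss_tot = np.sum(w * (y - ybar) ** 2)
    return 1.0 - ss_res / ss_tot


def collinearity_table(moderators, w):
    """Regress each moderator on all the others (same weights); return {name: (R^2, VIF)}."""
    names = list(moderators)
    table = {}
    for name in names:
        others = np.column_stack([moderators[m] for m in names if m != name])
        r2 = weighted_r2(moderators[name], others, w)
        table[name] = (r2, 1.0 / (1.0 - r2))
    return table


# Hypothetical data: 10 effect sizes, mean age, and the method used to assess aggression
methods = ["peer", "peer", "teacher", "obs", "peer",
           "teacher", "obs", "parent", "peer", "teacher"]
age = np.array([4.5, 5.0, 6.5, 8.0, 9.0, 15.0, 16.5, 12.0, 10.5, 7.0])
w = np.array([20, 35, 50, 42, 60, 80, 75, 30, 55, 40], dtype=float)
moderators = {
    "age": age,
    # Peer report is the reference category, so it gets no dummy column
    "teacher": np.array([m == "teacher" for m in methods], dtype=float),
    "obs": np.array([m == "obs" for m in methods], dtype=float),
    "parent": np.array([m == "parent" for m in methods], dtype=float),
}

for name, (r2, vif) in collinearity_table(moderators, w).items():
    flag = "too high" if r2 >= 0.90 else "ok"
    print(f"{name}: R^2 = {r2:.2f}, VIF = {vif:.2f} ({flag})")
```

The .90 cutoff in the loop is the one cited above. The VIF column carries the same information on a different scale: R² = .90 corresponds to a VIF of 10.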

2. Conceptually Confounded (Proxy) Moderators

A more difficult situation involves uncoded confounded moderators: any of the many other study characteristics that might be correlated, across studies, with the variables you have coded. For example, studies of a particular type of sample (e.g., adolescents vs. young children) might tend to share particular methodological features (e.g., using self-reports vs. observations). If I had failed to code this methodological feature, it would be a potential uncoded confounded moderator, and results indicating moderation by the sample characteristic might actually be due to moderation by methodology. Put differently, the moderator in my analysis would be only a proxy for the true moderator. Moreover, because the true moderator (type of measure) is conceptually very different from the moderator I tested (age), my conclusion would be seriously compromised if I failed to consider this possibility.

There is no way to avoid this problem of conceptually confounded, or proxy, moderators entirely. But you can reduce the threat it presents by coding as many plausible alternative moderator variables as possible (see Chapter 5). If you find evidence of moderation after controlling for a plausible alternative moderator, you can have greater confidence that you have found the true moderator (whereas if you did not code the alternative moderator, you could not evaluate this possibility empirically). At the same time, many other characteristics could be argued to be the true moderator, with the predictor you analyzed serving only as a proxy, and it is impossible to anticipate and code all of them. For this reason, some argue that findings of moderation in meta-analysis are merely suggestive and require replication in primary studies, where confounding variables can arguably be better controlled. I do not think there is a universal answer to how informative meta-analytic moderator results are. In my view, it depends on the conceptual arguments that can be made for the analyzed moderator versus other, unanalyzed moderators, and on how diverse the existing studies are in the samples, methodologies, and measures used across levels of the analyzed moderator. Despite these inherent ambiguities, assessing conceptually reasonable moderators is a worthwhile goal in most meta-analyses in which effect sizes are heterogeneous (see Chapter 8).
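The value of controlling for a coded alternative moderator can be illustrated with a small, deliberately artificial example. This sketch assumes hypothetical data in which effect sizes depend only on method (observation vs. self-report) and the observational studies happen to sample younger children, so age acts as a proxy. `wls` is a hypothetical helper that fits weighted least squares with NumPy; it is not the book's procedure or any package's API.

```python
import numpy as np


def wls(y, X, w):
    """Weighted least squares coefficients; the intercept comes first."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    sw = np.sqrt(np.asarray(w, dtype=float))
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta


# Hypothetical studies: observational studies (obs = 1) tend to sample younger children
age = np.array([4.0, 5.0, 6.0, 7.0, 10.0, 12.0, 14.0, 16.0])
obs = np.array([1, 1, 1, 0, 1, 0, 0, 0], dtype=float)
w = np.array([40, 55, 30, 60, 45, 70, 50, 65], dtype=float)

# By construction, effect sizes depend only on method, never on age
es = np.where(obs == 1, 0.10, 0.30)

age_only = wls(es, age, w)
age_and_method = wls(es, np.column_stack([age, obs]), w)

print(f"age slope, age only:        {age_only[1]:.4f}")
print(f"age slope, controlling obs: {age_and_method[1]:.4f}")
print(f"method (obs) coefficient:   {age_and_method[2]:.4f}")
```

Because this toy example contains no sampling error, the age coefficient drops to zero (up to rounding) once method is included; real data will be noisier. The converse is the worrying case described above: had method not been coded, only the age-only model would be available, and nothing in it would reveal that age is a proxy.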

3. Ensuring Adequate Coverage in Moderator Analyses

When examining and interpreting moderators, an important consideration is coverage: the extent to which enough studies represent the range of moderator values considered. The literature on meta-analysis has not provided clear guidance on what constitutes adequate coverage, so this evaluation is more subjective than might be desired. Nevertheless, I offer some suggestions based on my own experience.

As a first step, I suggest creating simple tables or plots showing the number of studies at various levels of the moderator variables. If you are testing only the main effects of the moderators, it is adequate to look at just the univariate distributions. For example, in the meta-analysis of Table 9.3, I might create frequency tables or bar charts of the methods of assessing aggression, and similar charts of the continuous variable age grouped into meaningful units (e.g., early childhood, middle childhood, early adolescence, and middle adolescence; or simply 2-year bins). Whether or not you report these tables or charts in a manuscript, they are extremely useful for evaluating the extent of coverage. Considering the method of assessing aggression, I see that these data contain a reasonable number of effect sizes from peer-report (k = 17) and teacher-report (k = 6) methods, but fewer from observations (k = 3) and only one using parent reports. Similarly, examining the distribution of age among these effect sizes reveals a gap in the early adolescence range (i.e., no studies between 9.5 and 14.5 years).
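A minimal sketch of these coverage tables in plain Python. The method counts reproduce those reported above (peer 17, teacher 6, observation 3, parent 1); the ages are hypothetical values chosen only to show how an empty bin exposes a gap, and they are not the Table 9.3 ages. `category_counts` and `binned_counts` are illustrative helper names.

```python
import math
from collections import Counter


def category_counts(levels):
    """Number of effect sizes at each level of a categorical moderator."""
    return Counter(levels)


def binned_counts(values, width=2.0):
    """Count values in consecutive bins [start, start + width), keeping empty bins."""
    start = math.floor(min(values) / width) * width
    counts = {}
    while start <= max(values):
        end = start + width
        counts[(start, end)] = sum(start <= v < end for v in values)
        start = end
    return counts


# Method counts as reported in the text for the Table 9.3 example
methods = ["peer"] * 17 + ["teacher"] * 6 + ["observation"] * 3 + ["parent"] * 1
print(category_counts(methods).most_common())

# Hypothetical mean ages (years), chosen only to illustrate a gap
ages = [3.5, 4.0, 5.5, 6.0, 7.5, 8.0, 9.0, 15.0, 16.0, 17.5]
bins = binned_counts(ages, width=2.0)
for (lo, hi), n in bins.items():
    print(f"{lo:4.1f}-{hi:4.1f} years: {n}")

# Empty bins are the gaps in coverage
empty = [b for b, n in bins.items() if n == 0]
print("empty bins:", empty)
```

Keeping empty bins in the output, rather than listing only the bins that contain studies, is the point of the exercise: a gap is visible only if the table shows where studies are missing.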

What constitutes adequate coverage? Unfortunately, there are no clear answers to this question, as it depends on the overall size of your meta-analysis, the correlations among moderators, the similarity of your included studies on characteristics not coded, and the conceptual certainty that the moderator considered is the true moderator rather than a proxy. At one extreme, one study representing a level of a moderator (e.g., the single study using parent report in this example) or one study in a broad region of a continuous moderator (e.g., if there were only one study in early childhood) is not adequate coverage, as it is impossible to know what other features of that study also differ from those of the rest of the studies. Conversely, five studies covering a region of a moderator probably constitute adequate coverage for most purposes (again, I base this recommendation on my own experience; I do not think that any studies have formally evaluated this claim). Beyond these general points of reference, the best advice I can provide is to consider these studies carefully: Do they all provide similar effect sizes? Do they differ from one another in other characteristics (which might speak to the generalizability of these studies for this region of the moderator)? Are the studies comparable to the studies at other levels of the moderator (if not, then it becomes impossible to determine whether the presumed moderator is a true or proxy moderator)?
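These reference points, together with the first question above, can be folded into a per-level summary. This is a sketch only: the cutoffs of one and five studies are the rough guides stated above, not formal standards, and the within-level statistic Q = Σ wᵢ(ESᵢ − mean)² is one common way to ask whether studies at a level provide similar effect sizes. The data and the helper names `coverage_flag` and `summarize_levels` are hypothetical.

```python
from collections import defaultdict


def coverage_flag(k):
    """Rough reference points from the text: 1 study is not enough, 5 probably is."""
    if k == 0:
        return "no coverage"
    if k == 1:
        return "inadequate"
    if k >= 5:
        return "probably adequate"
    return "judgment call: inspect these studies"


def summarize_levels(levels, effects, weights):
    """Per level: k, coverage flag, weighted mean effect, and within-level Q."""
    groups = defaultdict(list)
    for lev, es, w in zip(levels, effects, weights):
        groups[lev].append((es, w))
    summary = {}
    for lev, rows in groups.items():
        sum_w = sum(w for _, w in rows)
        mean = sum(w * es for es, w in rows) / sum_w
        # Weighted sum of squared deviations from the level's weighted mean
        q = sum(w * (es - mean) ** 2 for es, w in rows)
        summary[lev] = {"k": len(rows), "flag": coverage_flag(len(rows)),
                        "mean": mean, "Q": q}
    return summary


# Hypothetical effect sizes and inverse-variance weights
levels = ["peer"] * 5 + ["observation"] * 3 + ["parent"]
effects = [0.25, 0.30, 0.28, 0.35, 0.22, 0.10, 0.20, 0.30, 0.40]
weights = [50, 40, 60, 30, 45, 20, 20, 20, 25]

for lev, s in summarize_levels(levels, effects, weights).items():
    print(f"{lev}: k = {s['k']} ({s['flag']}), mean = {s['mean']:.3f}, Q = {s['Q']:.3f}")
```

With only two to four studies, Q has little power to detect heterogeneity, so a small Q is weak reassurance; the other questions above still require reading the studies themselves.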

Source: Card, Noel A. (2015). Applied Meta-Analysis for Social Science Research. The Guilford Press; annotated edition.
