When we have two nominal categorical variables with the same values (usually two raters’ observations or scores using the same codes), we can compute Cohen’s kappa to check the reliability of, or agreement between, the measures. Cohen’s kappa is preferable to simple percentage agreement because it corrects for the probability that raters will agree due to chance alone. In the hsbdataNew data set, the variable ethnicity is the ethnicity of the student as reported in the school records. The variable ethnicity reported by student is the ethnicity of the student as reported by the student. Thus, we can compute Cohen’s kappa to check the agreement between these two nominal ratings.
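To make the chance correction concrete, here is a minimal Python sketch of the usual kappa calculation, kappa = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from the row and column totals. The function name `cohen_kappa` and the 2 × 2 counts are hypothetical, chosen only for illustration; they are not the hsbdataNew data, and this is not how SPSS is implemented internally.

```python
from typing import Sequence


def cohen_kappa(table: Sequence[Sequence[int]]) -> float:
    """Cohen's kappa for a square cross-tabulation.

    Rows are the first rating, columns are the second rating,
    and both use the same categories in the same order.
    """
    k = len(table)
    n = sum(sum(row) for row in table)

    # Observed agreement: proportion of cases on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n

    # Chance agreement: for each category, multiply its row and column
    # proportions, then sum over categories.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2

    # Undefined (division by zero) when chance agreement is exactly 1.
    return (p_o - p_e) / (1 - p_e)


# Hypothetical counts for illustration only (not the hsbdataNew data).
example = [
    [20, 5],
    [10, 15],
]
kappa = cohen_kappa(example)
print(round(kappa, 2))  # 0.4
```

In this made-up table the raters agree on 70% of cases, but half of that agreement would be expected by chance, so kappa is only .40.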

- What is the reliability coefficient for the ethnicity codes (based on school records) and ethnicity reported by the student?

To compute the kappa:

- Click on Analyze → Descriptive Statistics → Crosstabs.

- Move ethnicity to the Rows box and ethnicity reported by student to the Columns box.

- Click Statistics… This will open the Crosstabs: Statistics dialog box.

- Click on Kappa.

- Click on Continue to go back to the Crosstabs dialog window.

- Then click on Cells… and request the Observed under Counts and Total under Percentages.

- Click on Continue and then OK. Compare your syntax and output with Output 3.6.

Output 3.6: Cohen’s Kappa With Nominal Data

    CROSSTABS
      /TABLES=ethnic BY ethnic2
      /FORMAT=AVALUE TABLES
      /STATISTIC=KAPPA
      /CELLS=COUNT TOTAL
      /COUNT ROUND CELL.

Crosstabs

Interpretation of Output 3.6

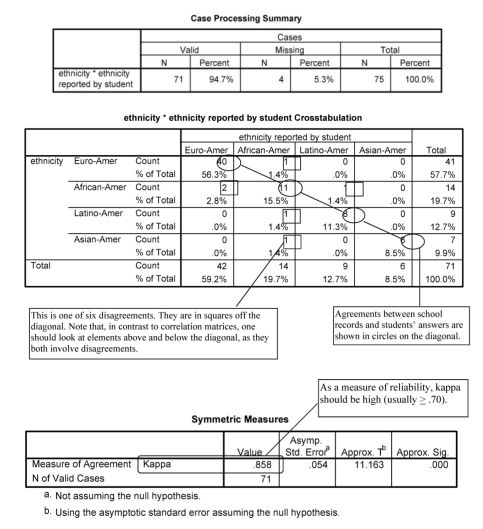

The Case Processing Summary table shows that 71 students have data on both variables and 4 students have missing data. The cross-tabulation of ethnicity and ethnicity reported by student comes next. The cases where the school records and the student agree are on the diagonal and circled. There are 65 (40 + 11 + 8 + 6) students with such agreement or consistency. The Symmetric Measures table shows that kappa = .86, which is very good; as a measure of reliability, kappa usually should be .70 or greater. Because we are not doing inferential statistics (we are not inferring that this result reflects relationships in a larger population), we are not concerned with the significance value. Note that because kappa corrects for chance, its value tends to be somewhat lower than other measures of interobserver reliability such as percentage agreement, which here is 65/71, or about 92%.
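The arithmetic behind this comparison can be checked with a short Python sketch that uses only the figures reported above (the diagonal counts, the 71 valid cases, and kappa = .86). The implied chance agreement is a rough back-calculation from the rounded kappa, assuming the standard formula kappa = (p_o − p_e) / (1 − p_e); it is not a value printed in the SPSS output, and the variable names are illustrative.

```python
# Counts reported in the interpretation of the output.
diagonal = [40, 11, 8, 6]  # cases where school records and student agree
n_valid = 71               # students with data on both variables

agreements = sum(diagonal)
p_o = agreements / n_valid  # observed (percentage) agreement

kappa_reported = 0.86       # rounded value from the Symmetric Measures table

# Rearranging kappa = (p_o - p_e) / (1 - p_e) to solve for p_e.
# Approximate only, because kappa_reported is rounded to two decimals.
p_e_implied = (p_o - kappa_reported) / (1 - kappa_reported)

print(agreements, round(p_o, 3), round(p_e_implied, 2))  # 65 0.915 0.4
```

So roughly 40% agreement would be expected by chance alone, which is why kappa (.86) comes out below the raw percentage agreement (about .92).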

Source: Leech, Nancy L. (2014), IBM SPSS for Intermediate Statistics, 5th edition, Routledge.
